Christos Kozyrakis

Professor, EE & CS, Stanford University

I am broadly interested in computer systems architecture: the hardware, the system software, and the interactions between the two that make systems faster, greener, and cheaper. My recent work focuses on cloud management technology, fast operating systems, and energy-efficient compute and memory structures for emerging workloads. I also like building and debugging full-system prototypes of all types.

For an up-to-date summary of current research, please visit the MAST webpage. We conduct research within the Stanford Platform Lab and the Pervasive Parallelism Lab.

Completed Projects

EPIC: Efficiency and Proportionality in the Cloud

Energy consumption has emerged as the major limitation for data centers in terms of operational cost, scalability, reliability, and environmental impact. The EPIC project is investigating hardware and software techniques that improve the energy efficiency and proportionality of data centers. Some aspects of our work include state-aware scale-down techniques, novel server designs, energy-efficient memory systems, and data center workload characterization and modeling. The EPIC project follows up on our recent work on energy modeling, benchmarking, and server design (JouleSort project).

Transactional Memory (2002-2010)

The Transactional Coherence and Consistency (TCC) project aims to simplify parallel programming using transactional memory (TM). With TM, programmers simply request that code segments operating on shared data execute atomically and in isolation. Concurrency control as multiple transactions execute in parallel is the responsibility of the system. TCC follows an "all transactions, all the time" model: at the hardware level, there is no execution outside of transactions. Transactions are the unit of parallel work, synchronization, coherence, consistency, and failure recovery. Our work spans all aspects of TM technology, from hardware support and prototypes to programming models and applications.
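
To make the programming model concrete, here is a minimal software sketch of optimistic transactions in Python. It is an illustration only and not the TCC hardware design: the names `TVar`, `Transaction`, and `atomically` are hypothetical, and the single commit lock is an assumption made to keep the example short. The programmer marks a code segment as atomic, and the runtime detects conflicts and retries.

```python
import threading


class TVar:
    """A shared variable with a version number (hypothetical helper)."""

    def __init__(self, value):
        self.value = value
        self.version = 0


# Assumption: one global lock serializes commits to keep the sketch small.
_commit_lock = threading.Lock()


class Transaction:
    def __init__(self):
        self.reads = {}   # TVar -> version observed at first read
        self.writes = {}  # TVar -> buffered new value

    def read(self, tvar):
        if tvar in self.writes:
            return self.writes[tvar]
        # Record the version before reading the value so that a concurrent
        # commit is caught by validation at commit time.
        self.reads.setdefault(tvar, tvar.version)
        return tvar.value

    def write(self, tvar, value):
        # Writes stay private to the transaction until commit.
        self.writes[tvar] = value

    def commit(self):
        with _commit_lock:
            # Conflict detection: fail if anything we read has changed.
            if any(t.version != v for t, v in self.reads.items()):
                return False
            for t, value in self.writes.items():
                t.value = value
                t.version += 1
            return True


def atomically(body):
    """Run body(tx) repeatedly until it commits without a conflict."""
    while True:
        tx = Transaction()
        result = body(tx)
        if tx.commit():
            return result
```

A caller would write, for example, `atomically(lambda tx: tx.write(counter, tx.read(counter) + 1))` and leave concurrency control to the runtime, with no explicit locks in application code.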

PowerNet: a Magnifying Glass for Computing Systems' Energy

The PowerNet project aims to characterize the energy consumption of enterprise-style computing infrastructures, including user machines as well as networking, storage, and server equipment. We collect fine-grained power consumption data using wireless power meters and correlate it with device utilization and system patterns to identify waste and opportunities for reducing the energy costs of everyday computing. Currently, our hybrid network of power meters is monitoring 138 devices in Gates Hall, consuming 9,463 watt-hours.

Secure Systems (2006-2010)

We are using tagged memories to create robust, high-performance frameworks for software security. Raksha provides hardware support for dynamic information flow tracking (DIFT) in order to protect deployed user-level programs and the operating system from attacks ranging from buffer overflows to SQL injections. Loki implements fine-grain protection for physical memory in order to reduce the trusted code base of HiStar, an operating system that prevents information leaks. Our work ranges from hardware support to security policies and includes the development of full-system prototypes.

The Cascade Project (2002 - 05)

The Cray Cascade project targeted a practical PetaFlop supercomputing system for the national security and industrial user community in the 2007 – 2010 timeframe. The effort included researchers from Cray, Stanford, Caltech, and Notre Dame and was funded by DARPA IPTO within the HPCS program. Our work focused on the development of a scalable micro-architecture for vector processors as well as flexible support for data-level and thread-level parallelism in large-scale supercomputing systems.

The Intelligent RAM Project (1996 - 2002)

The IRAM project at UC Berkeley developed an embedded processor architecture that combined high multimedia performance, low power consumption, and low design complexity. The key features of the IRAM architectures are vector processing and embedded memory technology. IRAM was mostly funded by DARPA IPTO within the DIS program.

The ATLAS ATM Switch (1995 - 96)

The ATLAS project at ICS-FORTH developed a single-chip gigabit ATM switch with optional credit-based flow control. The ATLAS switch is a general-purpose building block for high-speed communication in wide (WAN), local (LAN), and system (SAN) area networking, supporting a mixture of services from real-time, guaranteed quality-of-service to best-effort, bursty and flooding traffic, in a range of applications from telecom to multimedia and multiprocessor NOW. Funding was provided by the European Union.

The Telegraphos II Switch (1994 - 95)

The Telegraphos II project used RISC principles to develop an architecture for high speed computer communication for workstation clusters. Funding was provided by the European Union.

Prototype Systems and Chips

The CoolSort Energy-efficient Server

The CoolSort server was assembled at Stanford in collaboration with researchers at HP Labs. It uses a notebook processor (Core2 Duo) and 13 notebook disks (Hitachi TravelStar) to create an energy-efficient system for sorting. CoolSort was the first system to be listed as winner of the Joule category in the Sort Benchmark, able to sort 11,300 records/joule. This represents a threefold improvement over other systems available at the time (2007).

The Raksha and Loki Security Systems

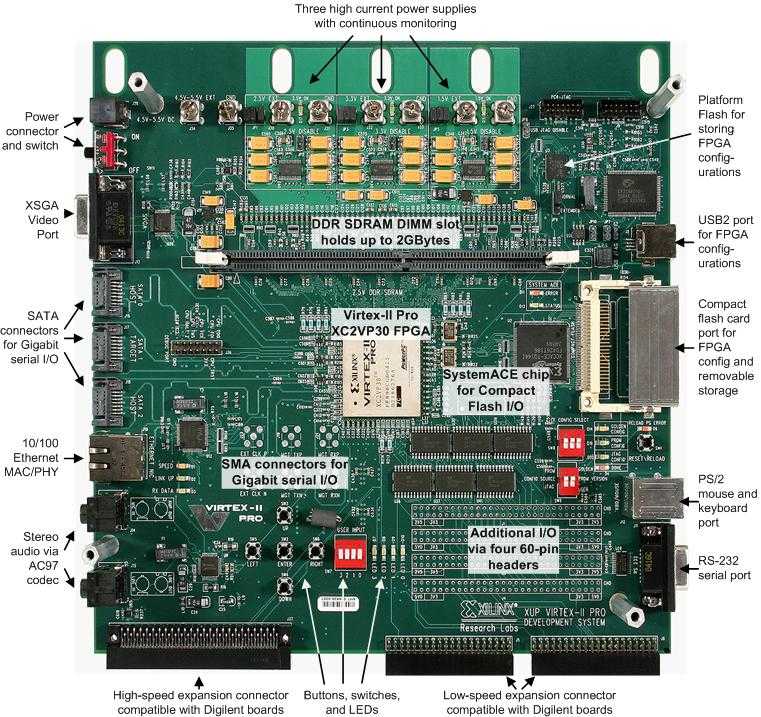

Raksha and Loki were developed at Stanford. Raksha is an architecture with hardware support for dynamic information flow tracking. Every memory word is extended by 4 bits to allow for programmable policies that track and detect illegal uses of untrusted information. Raksha was used to detect security attacks such as buffer overflows and SQL injections on binary applications and the Linux operating system. Loki associates each memory word with a 32-bit label, used to implement fine-grain protection on physical memory. Loki was used to reduce the trusted code base of the HiStar operating system. We prototyped both architectures by modifying the Leon-3 SPARC core and mapping it to a Xilinx FPGA board.
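
The tagging idea can be illustrated with a small software model in Python. This is a sketch under stated assumptions, not Raksha's actual hardware or policy format: the `Word` type, the `TAINT` bit assignment, and the two rules shown (propagate tags through arithmetic, trap when a tainted word is used as a jump target) are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Assumption: one of the 4 tag bits marks data from an untrusted source.
TAINT = 0b0001


@dataclass(frozen=True)
class Word:
    value: int
    tag: int = 0  # 4-bit tag stored alongside the data word


class SecurityTrap(Exception):
    """Raised when a policy check fails."""


def add(a: Word, b: Word) -> Word:
    # Propagation rule: the result carries the union of the source tags.
    return Word((a.value + b.value) & 0xFFFFFFFF, (a.tag | b.tag) & 0xF)


def jump_target(w: Word) -> int:
    # Check rule: a tainted word must not be used as a code pointer.
    if w.tag & TAINT:
        raise SecurityTrap(f"tainted jump target {w.value:#x}")
    return w.value
```

In this model, adding an untrusted offset to a trusted base address yields a tainted result, so `jump_target` raises `SecurityTrap` on it, which mirrors how a tag-based policy can catch a control-flow hijack that starts from attacker-supplied input.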

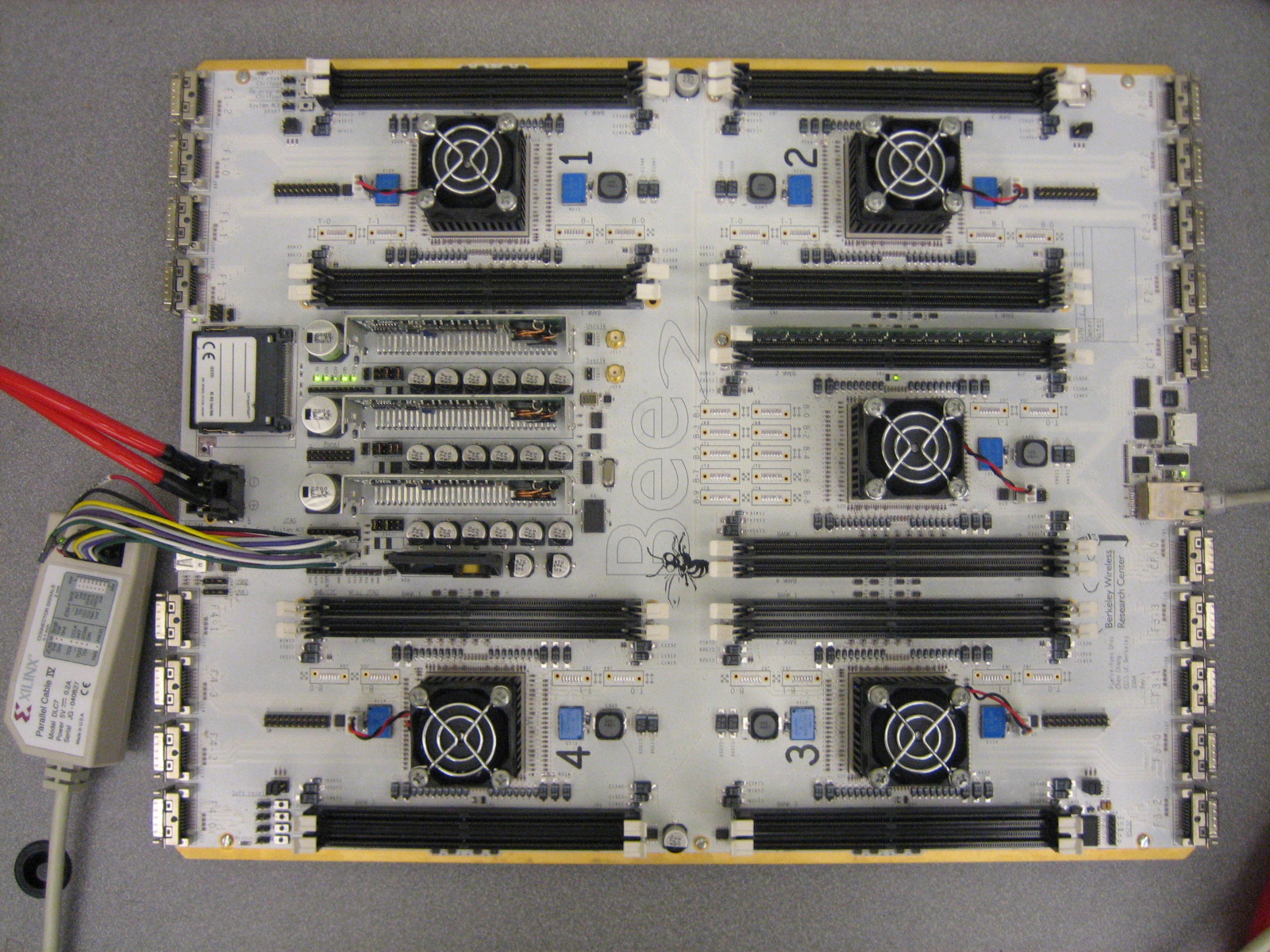

The Atlas HTM System

ATLAS was developed at Stanford within the TCC project. It is an FPGA-based prototype of the TCC architecture on the BEE2 board. It includes 8 PPC405 processors with synthesized caches that provide hardware support for transactional version management and conflict detection. A 9th processor runs the Linux operating system. Apart from hardware support for TM, ATLAS supports features such as performance monitoring and deterministic replay. The design is also known as "RAMP Red".

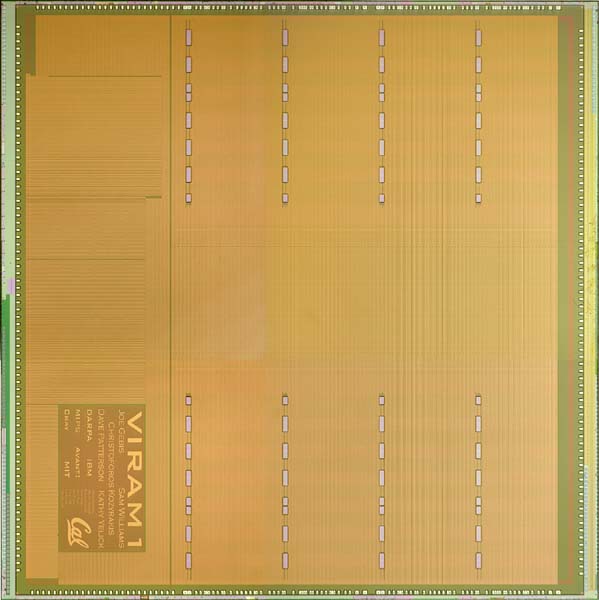

The VIRAM Processor

The VIRAM processor was developed at UC Berkeley within the IRAM project. It is a media-oriented vector processor with an integrated main memory system. It includes a 64-bit MIPS5Kc processor and a vector coprocessor with 4 lanes. It can reach up to 9.6 Gops/sec at 200MHz and has an on-chip DRAM capacity of 13Mbytes. The design includes 125 million transistors in a 324mm^2 chip. It was fabricated in 2003 in a 0.18um embedded DRAM CMOS process by IBM.

The VIRAM Test Chip

The VIRAM Test Chip was developed at UC Berkeley within the IRAM project. Its purpose was to combine embedded DRAM with high-speed logic and signaling. It was fabricated in 1998 in a 0.35um DRAM CMOS process by LG and it included approximately 10 million transistors.

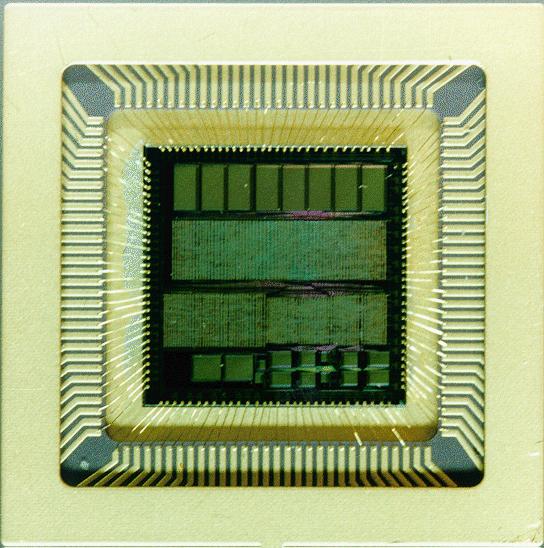

The ATLAS Switch

ATLAS was developed by ICS/FORTH in Greece. It is a 10Gb/s single-chip ATM switch with credit-based flow control and sub-microsecond cut-through latency. The design includes 6 million transistors in a 225mm^2 chip. It was fabricated in 1999 in a 0.35um CMOS process by ST.

The Telegraphos II Switch

Telegraphos II was developed by ICS/FORTH in Greece. This is a single-chip switch for distributed shared memory multiprocessors. The design includes 0.6 million transistors in a 72mm^2 chip. It was fabricated in 1995 in a 0.7um CMOS process.